R-Pi based Piano Training Results Assessment Tool

Jinxuan Wang NetID: jw2552

Haolin Li NetID: hl992

Introduction

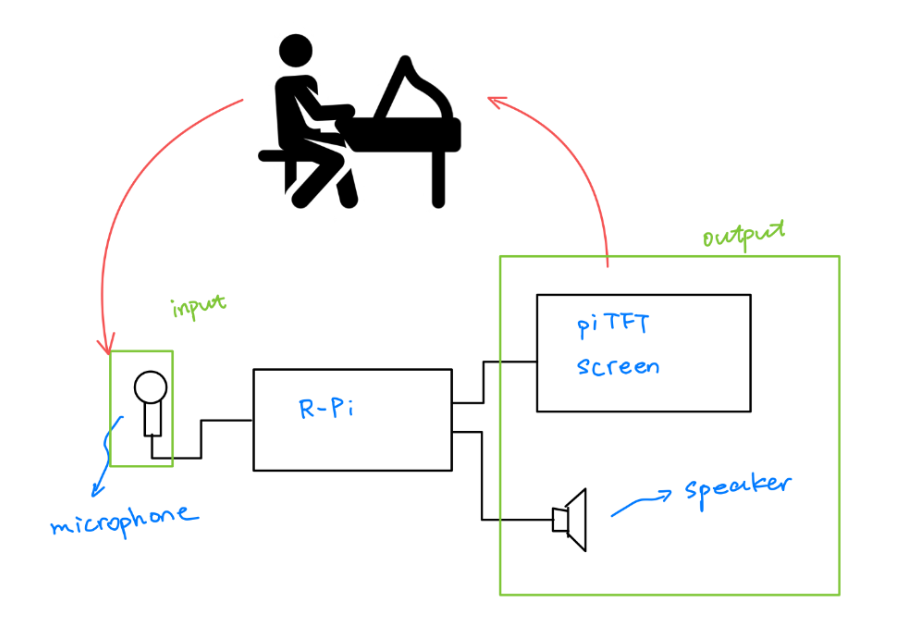

This project is an embedded system that uses a Raspberry Pi (RPi) as the central component for data and signal processing. The system uses a USB microphone as the input device and a piTFT screen as the output device. The user loads a sample piano score into the system before starting. The system then "listens" to the user playing the piano through the microphone and compares each input note with the corresponding note in the score, one at a time. If the input differs from the sample (e.g., played higher or lower than the loaded score), the screen displays the mistake in real time.

The goals of this project are for the system to transform the input sound clip into the frequency domain, compare the input frequencies to the fundamental frequencies of notes, and report the correctness of the current note. This allows users to improve their piano playing through “real-time” feedback on their mistakes. At the end, the program displays the total percentage of correct notes and compares this percentage to the last training session.

The elements of this project include the following hardware: PiTFT screen and USB microphone. The PiTFT screen will be used to display the output. The microphone will be used to capture the input sound.

Objective

1. The USB microphone should be able to record the sound of the user playing the piano at a decent resolution and speed.

2. The recorded sound clip will be processed by a Fast Fourier Transform (FFT) to obtain the frequencies inside the sound clip. The FFT should output the correct frequency the user played. To be exact, the frequency with the highest intensity (power) will be extracted in Hertz.

3. The analyzed frequency will be compared to the predefined fundamental frequencies of piano notes, in the sequence defined by the user-loaded music score. The frequencies will be compared one at a time, from the first note to the last note of the loaded score.

a. If the input frequency falls into the boundary (± 3Hz) of the corresponding note’s fundamental frequency, the system will assign this note as correct. A match status of “good” will be assigned.

b. If the input frequency falls outside the boundary, the program will check whether it is higher or lower than the corresponding note, and assign either “high” or “low” to this note.


4. After each input note has been compared with the corresponding note from the loaded score, the system will display the current note’s match status on the piTFT screen (“good”, “high”, or “low”). Two logs of notes, one for the predefined music score and one for the input notes, will be displayed alongside it. Each log will contain the five most recent notes.

5. When the score is completed, the system will calculate the percentage of correctly played notes and display this percentage on the piTFT screen.

6. The system will compare the current training result with the last training session. A message will be displayed to show whether the user has improved or regressed in this session of training.

The objective of our project is for the system to first display “real-time” feedback on each note to the user (“good”, “high”, or “low”) and keep logs of the five most recent notes. At the end, the system displays an overall score and compares it with the last session.

Project Design

The piTFT display was developed with the Pygame package. The background is filled with black and the logs are displayed in white characters. The current note’s correctness is displayed in the middle of the screen inside a circle of the corresponding color. When the program starts, it shows a screen asking the user to press the start button to begin training or the end button to exit the program. The user can leave the program at any moment by pressing the end button.

The touch screen was disabled. The buttons are the physical buttons on the right side of the piTFT screen: the one at the bottom right is start and the one at the upper right is end. These two buttons are read by the RPi through GPIO.

The fundamental frequencies of piano notes were defined and stored in a 2D array: each frequency was stored as a floating-point number and each note name as a string. User-loaded music scores are stored in the same structure.
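A minimal sketch of such a note table is given here. It assumes equal temperament with A4 = 440 Hz and computes the frequencies instead of typing them in from a reference table; the names `NOTE_TABLE`, `SCORE`, `note_frequency`, and `build_note_table` are hypothetical and are not taken from the project code.

```python
# Hypothetical note table: each row is [frequency in Hz (float), note name (str)].
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_frequency(name: str, octave: int) -> float:
    """Equal-temperament frequency of a note, relative to A4 = 440 Hz."""
    semitones_from_a4 = (
        NOTE_NAMES.index(name) - NOTE_NAMES.index("A") + 12 * (octave - 4)
    )
    return 440.0 * 2.0 ** (semitones_from_a4 / 12.0)


def build_note_table(low_octave: int = 4, high_octave: int = 5) -> list:
    """Return rows of [frequency, name], for example [440.0, 'A4']."""
    return [
        [note_frequency(name, octave), f"{name}{octave}"]
        for octave in range(low_octave, high_octave + 1)
        for name in NOTE_NAMES
    ]


NOTE_TABLE = build_note_table()

# A loaded score uses the same row structure, one row per note to be played.
# This three-note score is made up for illustration.
SCORE = [row for row in NOTE_TABLE if row[1] in ("F4", "A4", "C5")]
```

Keeping the score in the same row structure as the table means the comparison step can read the target frequency and the note name from a single row.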

For the input stage, the availability of the USB microphone is checked first. If the mic is plugged in and available, the program continues; if not, an error message is displayed and the program ends. The mic then records sound in a specific, adjustable time window: the larger the window, the slower the score must be played, and vice versa. Recording is done with the PyAudio package at a sampling rate of 44100 Hz (44.1 kHz) with 1 channel, and the output is stored as a .wav file. Each note is recorded separately, one at a time, and the .wav file is overwritten as the program continues.

After the recording is finished, the program reads the .wav file and uses the NumPy package to compute the Fast Fourier Transform of its samples. For each .wav file (in other words, each time window), the frequency with the highest intensity (power) is extracted. This frequency is compared with the fundamental frequency of the corresponding note in the loaded score. The program then assigns a match status to the note: “good” if the input falls inside the boundary of the target range (set by an adjustable threshold value), or “high” or “low” if the input falls above or below that range.
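The comparison step can be sketched as a small function. This is an illustration only: the function name `match_status` and its parameter names are hypothetical, and the default threshold of 3 Hz is the ± 3 Hz boundary stated in the Objective section.

```python
def match_status(input_hz: float, target_hz: float, threshold_hz: float = 3.0) -> str:
    """Return 'good', 'high', or 'low' for one played note.

    input_hz     -- peak frequency extracted from the recording
    target_hz    -- fundamental frequency of the expected note
    threshold_hz -- half-width of the accepted range (adjustable)
    """
    if abs(input_hz - target_hz) <= threshold_hz:
        return "good"
    return "high" if input_hz > target_hz else "low"
```

For example, with a target of A4 (440 Hz), an input of 441.5 Hz would be reported as “good”, while 450 Hz would be “high” and 430 Hz would be “low”.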

The match status will be displayed in the middle of the piTFT screen. On the left of the status circle the log for the loaded music score will be displayed. On the right of the status circle the log for the input music notes will be displayed. Each of the logs will consist of the 5 most recent notes. For each iteration, the least recent one will be dropped and the most recent one will be appended to the top; and the match status circle will be refreshed for each iteration.
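One way to keep such five-note logs is a fixed-length double-ended queue, sketched here with Python's `collections.deque`. The names `score_log`, `input_log`, and `update_logs` are hypothetical, the note sequence is made up, and the project code may store its logs differently.

```python
from collections import deque

LOG_LENGTH = 5  # each log keeps the five most recent notes

# With maxlen set, adding to a full deque silently drops the item at the other end.
score_log = deque(maxlen=LOG_LENGTH)  # notes from the loaded score
input_log = deque(maxlen=LOG_LENGTH)  # notes detected from the microphone


def update_logs(target_name: str, played_name: str) -> None:
    """Put the newest notes at the top (index 0) of both logs."""
    score_log.appendleft(target_name)
    input_log.appendleft(played_name)


# Six made-up iterations: the oldest pair ("C5", "C5") falls off the logs.
for target, played in [("C5", "C5"), ("D5", "D5"), ("E5", "F5"),
                       ("F5", "F5"), ("G5", "G5"), ("A5", "A5")]:
    update_logs(target, played)
```

Each display refresh can then iterate over the two logs from index 0 downward to draw the newest note first.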

Each iteration consists of the following steps: recording and storing the clip, reading the file, FFT and frequency comparison, and piTFT refresh. This process is repeated so that each note of the loaded music score is checked in turn.

After all notes have been checked and the loop ends, the program computes the percentage of correctly matched notes (# matched notes / # notes in score) and displays it on the screen. The current percentage is compared with that of the last training session, and the previous percentage is then overwritten by the current one. The program displays a message based on the comparison: the user made progress (current percentage > previous percentage), obtained the same result as in the last training (current percentage = previous percentage), or regressed since the last training (current percentage < previous percentage).

This checkout screen remains on display for a short time, after which the program returns to the initial screen and waits for the user to press the start button again to begin the next round of training.
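The end-of-session calculation can be sketched as follows. The function names and the message strings are hypothetical, and how the previous percentage is stored between sessions is left out.

```python
def percent_correct(statuses: list) -> float:
    """Percentage of notes whose match status is 'good'."""
    if not statuses:
        return 0.0
    return 100.0 * sum(status == "good" for status in statuses) / len(statuses)


def progress_message(current: float, previous: float) -> str:
    """Compare this session with the last one (message texts are made up)."""
    if current > previous:
        return "You made progress!"
    if current < previous:
        return "You regressed since last training."
    return "Same result as last training."


# Made-up session: 3 of 4 notes were matched.
session = ["good", "high", "good", "good"]
current = percent_correct(session)
message = progress_message(current, previous=50.0)
```

After the message is shown, the stored previous percentage would be replaced by `current` so that the next session is compared against this one.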

Testing

To verify the correctness of the project, the following components need to be tested: recording, file access, FFT, and the computing power consumed by the program on the Raspberry Pi.

The USB mic was first tested with command-line tools to verify its hardware address and availability. It was then tested from Python code with the PyAudio package. It was verified that the USB mic can record sound clips through PyAudio and that the clips can be stored as .wav files using the wave module. The sound recorded in the file was checked to be complete (covering the set time window) and clear. It was also verified that Python can read the .wav files back using the wave functions.
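The file write and read check can be reproduced without a microphone. The sketch below is an assumption-laden stand-in for the actual test: it writes a synthetic 16-bit mono tone with the standard `wave` module and reads the header back; `write_tone`, `read_info`, and the file name are hypothetical.

```python
import math
import os
import struct
import tempfile
import wave

RATE = 44100  # sampling rate in Hz, as used for the recordings


def write_tone(path: str, freq_hz: float, seconds: float, rate: int = RATE) -> None:
    """Write a mono 16-bit sine tone to a .wav file."""
    n_samples = int(rate * seconds)
    samples = (
        int(32767 * 0.5 * math.sin(2 * math.pi * freq_hz * i / rate))
        for i in range(n_samples)
    )
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wf.setframerate(rate)
        wf.writeframes(b"".join(struct.pack("<h", s) for s in samples))


def read_info(path: str) -> tuple:
    """Return (channels, sample width in bytes, frame rate, frame count)."""
    with wave.open(path, "rb") as wf:
        return (wf.getnchannels(), wf.getsampwidth(),
                wf.getframerate(), wf.getnframes())


path = os.path.join(tempfile.gettempdir(), "roundtrip_check.wav")
write_tone(path, 440.0, 0.5)
info = read_info(path)
duration = info[3] / info[2]  # frames / frames per second
```

Comparing `duration` with the requested window is one way to confirm that a clip covers the whole time window.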

The Fast Fourier Transform was tested against known inputs. Synthetic sound clips were generated with online tone generators, which produce sound at a frequency specified by the user. This provided a ground truth value for each clip passed through the NumPy FFT functions. First, it was verified that the peak of the spectrum of a sound clip can be located and converted into a frequency in Hertz. Next, it was verified that this peak, the frequency with the highest intensity (power), matches the generated frequency. The output frequencies from the FFT are very close to the ground truth values; the average error between the FFT output and the ground truth is less than 0.5 Hz.
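A test of this kind can be sketched with a tone synthesized directly in NumPy instead of an online generator. The helper names `peak_frequency` and `synth_tone` are hypothetical, and the sketch uses `np.fft.rfft` (the real-input FFT), which is a choice made here for brevity and not necessarily the call used in the project.

```python
import numpy as np

RATE = 44100  # sampling rate in Hz


def peak_frequency(samples: np.ndarray, rate: int = RATE) -> float:
    """Return the frequency (Hz) of the strongest bin in the spectrum."""
    spectrum = np.abs(np.fft.rfft(samples))
    spectrum[0] = 0.0  # ignore the DC component
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / rate)  # bin centres in Hz
    return float(freqs[np.argmax(spectrum)])


def synth_tone(freq_hz: float, seconds: float, rate: int = RATE) -> np.ndarray:
    """Pure sine tone, standing in for a generated test clip."""
    t = np.arange(int(rate * seconds)) / rate
    return np.sin(2 * np.pi * freq_hz * t)


ground_truth = 440.0
detected = peak_frequency(synth_tone(ground_truth, seconds=1.0))
error = abs(detected - ground_truth)
```

The spacing between FFT bins is the sampling rate divided by the number of samples, so a one-second clip gives 1 Hz bins, while a shorter time window gives coarser frequency resolution.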

Next, instead of synthetic sound, real piano note sounds were tested. Online piano keyboard simulators were used to generate analogues of real piano sound (so-called “real sound”). These real sounds differ from synthetic ones because, for each note, the frequency keeps varying around its fundamental frequency (instead of holding the pure frequency set by the user in synthetic sounds). Established FFT analysis software was used to obtain the ground truth values of sample real sound clips. It was noticed that the beginning of a piano note is higher than the fundamental frequency and that the frequency keeps decaying until the note disappears.

By extracting the highest-intensity frequency from a sound clip, the FFT calculation should identify the fundamental frequency of the note. However, when notes lower than F4 are played, higher peaks in the frequency domain have the highest intensity instead of the fundamental frequency of the note. This phenomenon was suspected to be caused by the superposition of waves. For a real piano, when a key is pressed and the string is struck, the frequency produced differs from moment to moment and decays over time until it disappears. The position of the strings inside the piano varies, and at certain positions some low-frequency components are superimposed so that a higher frequency eventually appears. This high frequency has been observed experimentally to be on average 2 to 3 times the proper fundamental frequency, and it has a strong intensity (power). Peaks at integer multiples of the fundamental are also what the harmonics (overtones) of a vibrating string would produce.

It is therefore difficult for the program to determine the actual correct frequency. Due to time constraints, no method has yet been developed to solve this problem, so the program is currently limited to notes above F4. A method is needed to distinguish whether a frequency is a superposition of several lower frequencies (lower notes) or is itself a correct higher frequency (higher notes).
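The effect can be reproduced with a synthetic note. The sketch below is an illustration under stated assumptions, not a model of a real piano: the 130 Hz fundamental (close to C3) and the amplitudes of the three components are made up, and `peak_frequency` and `synth_note` are hypothetical helper names.

```python
import numpy as np

RATE = 44100  # sampling rate in Hz


def peak_frequency(samples: np.ndarray, rate: int = RATE) -> float:
    """Return the frequency (Hz) of the strongest bin in the spectrum."""
    spectrum = np.abs(np.fft.rfft(samples))
    spectrum[0] = 0.0  # ignore the DC component
    freqs = np.fft.rfftfreq(len(samples), d=1.0 / rate)
    return float(freqs[np.argmax(spectrum)])


def synth_note(f0: float, amplitudes: list, seconds: float = 1.0,
               rate: int = RATE) -> np.ndarray:
    """Sum of sines at f0, 2*f0, 3*f0, ... with the given amplitudes."""
    t = np.arange(int(rate * seconds)) / rate
    return sum(a * np.sin(2 * np.pi * f0 * (k + 1) * t)
               for k, a in enumerate(amplitudes))


f0 = 130.0  # assumed fundamental of the low note
# Assumed amplitudes: the component at 2*f0 is stronger than the fundamental.
low_note = synth_note(f0, amplitudes=[0.4, 1.0, 0.3])
detected = peak_frequency(low_note)
ratio = detected / f0
```

With these amplitudes the strongest bin sits at twice the fundamental, so a rule that keeps only the highest peak reports a note one octave too high.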

The performance of the program and its demand for computing power were also tested. Initializing the USB mic and starting the recording with PyAudio takes ~0.15 seconds, and writing and reading the .wav file, computing the FFT, and completing all subsequent calculations takes ~0.25 seconds, for a total overhead of ~0.4 seconds per note. Such a delay is small enough for a human user that the feedback can be approximated as "real-time". Strictly speaking, however, the system works with a lag rather than in real time.

Using the htop command, it was observed that the average load per core usually does not exceed 40% on the 4 cores of the Raspberry Pi 4 used to develop this program. Overall, this program does not require much computing power and can run on less powerful platforms.


Result

After six weeks, all the goals planned in the previous sections have been achieved and tested for verification. The system can now continuously record sound clips of a specific length through the USB mic and store each clip as a .wav file. The system then reads this file, performs an FFT, and compares the frequency with the highest intensity within the clip to the pre-loaded score, note by note.

During training the system will display the match status of the current note, and after completing all the notes of the score it will evaluate the overall correctness and compare it with the previous training session.

Although the planned goals were met and the basic functions are implemented, some parts still need further improvement. First, the program does not work reliably at low frequencies, that is, for notes lower than F4 (~350 Hz). Second, data format conversion does not work well when importing CSV files and turning the spreadsheet elements into Python music score arrays.

Future Works

The system still leaves a lot to be desired. First, the system does not work in the lower note range (lower than F4) at this stage because the wave superposition problem has not been solved. The system needs a scheme to distinguish whether a frequency results from the superposition of several frequencies or was itself generated at that frequency. Second, the way the music score is loaded can be improved. We expect to let the user write a CSV file and to read this file through Python to load the score. At this stage, reading elements from CSV files and transforming them in turn into the corresponding elements (notes) of a predefined 2D array does not yet work reliably. The elements that are read need to be converted to the right data format; otherwise the correct score will not be loaded into the program.
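One possible shape for such a loader is sketched below with the standard `csv` module. Everything specific here is an assumption: the CSV layout (note names separated by commas, in playing order), the small `NOTE_FREQUENCIES` lookup, and the function name `load_score`. The explicit `float(...)` and string cleanup are where the data format conversion would happen.

```python
import csv
import io

# Hypothetical lookup of fundamental frequencies in Hz (sharp-based names only).
NOTE_FREQUENCIES = {"F4": 349.23, "G4": 392.00, "A4": 440.00, "C5": 523.25}


def load_score(csv_file, table=NOTE_FREQUENCIES) -> list:
    """Read note names from CSV text and return rows of [frequency, name]."""
    score = []
    for row in csv.reader(csv_file):
        for cell in row:
            name = cell.strip().upper()  # CSV cells arrive as raw strings
            if not name:
                continue  # skip empty cells
            if name not in table:
                raise ValueError(f"unknown note name: {cell!r}")
            score.append([float(table[name]), name])
    return score


# In-memory stand-in for a user-written CSV file.
example = io.StringIO("F4, A4\nc5,G4\n")
score = load_score(example)
```

Raising an error on an unknown note name makes a malformed score fail at load time instead of producing wrong comparisons during training.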

Conclusion

After six weeks of work, we accomplished and verified the pre-planned goals. The work involved interaction between software and hardware (USB mic), file writing and reading, FFT, Pygame display, and GPIO input functions.

Through this project, we applied the knowledge learned in the ECE 5725 course and used it to solve the problems we encountered. This knowledge also helped us to further optimize the performance of the system, for example, by structuring the code to achieve faster response times. The project also exposed us to knowledge that we had not previously acquired.

Budget

Raspberry Pi 4 — included

PiTFT screen — included

USB microphone — $10

Total budget for the project = $10

Reference

1) AvizzS. (n.d.). How do I find the peak frequency of a WAV file in python? Signal Processing Stack Exchange. Retrieved December 9, 2022, from https://dsp.stackexchange.com/questions/60045/how-do-i-find-the-peak-frequency-of-a-wav-file-in-python

2) Fundamental frequencies of Notes in Western Music | Auditory Neuroscience. (n.d.-b) http://auditoryneuroscience.com/pitch/fundamental-frequencies-notes-western-music

3) Hrisko, J. (2022, November 16). Recording Audio on the Raspberry Pi with Python and a USB Microphone. Maker Portal. https://makersportal.com/blog/2018/8/23/recording-audio-on-the-raspberry-pi-with-python-and-a-usb-microphone

4) Search — NumPy v1.23 Manual. (n.d.). https://numpy.org/doc/stable/search.html?q=fft

5) Fourier Transforms (scipy.fft) — SciPy v1.9.3 Manual. (n.d.). https://docs.scipy.org/doc/scipy/tutorial/fft.html

6) Read and write WAV files. (n.d.). Python Documentation. https://docs.python.org/3/library/wave.html

7) PyAudio. (2022, July 19). PyPI. https://pypi.org/project/PyAudio/

8) ECE 5725 Lab works: lab2, lab3. (pygame display, GPIO input)

Code Appendix

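    import time
    import wave

    import numpy as np
    import pyaudio
    from scipy.io import wavfile as wav

    RATE = 44100          # sampling rate in Hz
    CHUNK = 4096          # frames per buffer
    RECORD_SECONDS = 0.5  # assumed value; the time window is adjustable

    start = time.time()
    p = pyaudio.PyAudio()

    # Start recording
    stream = p.open(format=pyaudio.paInt16, channels=1, rate=RATE,
                    input_device_index=1, input=True,
                    frames_per_buffer=CHUNK)
    init_time = time.time() - start
    print('RECORDING START')

    # Collect one time window of audio (this step is not shown in the
    # original excerpt and is filled in here as an assumption)
    frames = [stream.read(CHUNK)
              for _ in range(int(RATE / CHUNK * RECORD_SECONDS))]
    stream.stop_stream()
    stream.close()

    ## FFT functions
    # Write .wav file (recordings)
    wavefile = wave.open('test9.wav', 'wb')
    wavefile.setnchannels(1)
    wavefile.setsampwidth(p.get_sample_size(pyaudio.paInt16))
    wavefile.setframerate(RATE)
    wavefile.writeframes(b''.join(frames))
    wavefile.close()

    # Read input .wav file
    rate, data = wav.read('test9.wav')

    # Compute FFT on input data
    fftdata = np.fft.fft(data)

    # Discrete Fourier Transform sample frequencies (cycles per sample)
    freqs = np.fft.fftfreq(len(fftdata))

    # Find the frequency with the greatest intensity
    max_index = np.argmax(np.abs(fftdata))
    freq = freqs[max_index]
    Fhz = abs(freq * rate)  # unit conversion -> Hz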

Contact

For inquiries about the design project or general questions about the website, please contact:

Instructor/Advisor

Name: Prof. Joseph Skovira

Email: jfs9@cornell.edu

Members:

Name: Jinxuan Wang

Email: jw2552@cornell.edu

Name: Haolin Li

Email: hl992@cornell.edu

Thank you for visiting our design project report website. We hope you found the information useful and we welcome your feedback.

Acknowledgement

We would like to express our sincere gratitude to our advisor, Prof. Joseph Skovira, for his invaluable guidance and support throughout the duration of this project. His expertise and knowledge have been instrumental in helping us design and implement our project, and we are deeply grateful for his guidance and support. We also appreciate his willingness to always provide us with prompt and helpful feedback, as well as his patience and understanding throughout the process. We would not have been able to complete this project without his support, and we are truly grateful for all of his contributions. Thank you, Prof. Skovira, for your invaluable guidance and support.

We would like to express our gratitude to Cornell University for providing us with the resources and support needed to complete this project. We are grateful for the opportunities and education that Cornell has provided us, and we are proud to be part of the Cornell community.